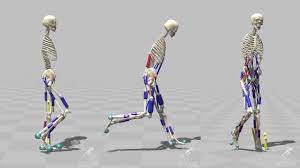

ID 2412: IOC-inspired reinforcement learning of walking with a neuromusculoskeletal model

Gait simulations based on neuromusculoskeletal models are used to study the control of human movement and can help to understand the interaction between the central nervous system (CNS) and the body under changing environmental or physical conditions [1]. Deep reinforcement learning (RL) can learn a controller without pre-defining its structure, which has the potential to provide additional insights into movement control and to help identify control schemes for prostheses or exoskeletons [3].

Deep RL has already been applied successfully to generate natural walking motion [1, 4, 6]. In RL, an agent learns a policy that maximizes the cumulative reward it receives from the environment. However, finding a reward function that leads to physiologically plausible movement remains challenging [4], and additional experimental data are often required [3, 5]. Moreover, most studies report specific reward function parameters without describing a structured methodology for determining them, which suggests that the reward terms are often tuned manually by trial and error.
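Strictly speaking, "maximizing the reward" in RL means maximizing the expected discounted return, i.e. the sum of per-step rewards weighted by powers of a discount factor gamma. The minimal sketch below computes this return for a single episode; the function name and the default gamma are illustrative assumptions, not values taken from the cited studies.

```python
from typing import Sequence


def discounted_return(rewards: Sequence[float], gamma: float = 0.99) -> float:
    """Return G_0 = sum_t gamma**t * r_t for one episode.

    The policy is trained to maximize the expected value of this quantity;
    gamma in [0, 1] trades off immediate against future reward.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must lie in [0, 1]")
    g = 0.0
    # Iterate backwards so that G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


if __name__ == "__main__":
    # Three steps of reward 1.0 with gamma = 0.5: 1 + 0.5 + 0.25
    print(discounted_return([1.0, 1.0, 1.0], gamma=0.5))  # 1.75
```

Every weight inside the per-step reward r_t changes this return, which is why manually tuned reward terms directly shape the learned gait.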

It is generally assumed that natural walking emerges from an optimization process performed by the CNS [7]. Inverse optimal control (IOC) addresses the problem of determining the underlying cost function. In a bi-level optimization approach, an inner optimal control problem generates a movement for a given set of cost function weights, while an outer optimization adjusts these weights such that the simulated movement matches the corresponding experimental data as closely as possible [8]. Potential cost function terms include effort minimization, increased stability, and avoidance of passive ligament torques [7, 9]. A recent study showed that the relative importance of the cost function terms varies with walking speed, and regression models were developed to predict these weights [7].
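The bi-level structure can be illustrated with a deliberately small toy problem in plain Python. In this sketch the "movement" is just a vector x, the two cost terms (an effort-like term ||x||^2 and a hypothetical term pulling x toward a reference r) are stand-ins for real gait cost terms, the inner level finds the optimal movement by gradient descent, and the outer level grid-searches the weight so that the inner optimum matches "experimental" data. All names, cost terms, and step sizes are illustrative assumptions; the cited studies solve full musculoskeletal optimal control problems at the inner level.

```python
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Cost = Callable[[Sequence[float]], float]


def effort(x: Sequence[float]) -> float:
    # Toy stand-in for an effort term (e.g. summed squared activations).
    return sum(xi * xi for xi in x)


def make_reference_term(r: Sequence[float]) -> Cost:
    # Toy stand-in for a second cost term that pulls the movement toward r.
    return lambda x: sum((xi - ri) ** 2 for xi, ri in zip(x, r))


def inner_solve(weights: Sequence[float], costs: Sequence[Cost], dim: int,
                steps: int = 200, lr: float = 0.05, h: float = 1e-6) -> Vector:
    """Inner level: minimize sum_i w_i * c_i(x) by gradient descent, using
    central-difference gradients so arbitrary cost terms can be plugged in.
    The step size lr is chosen for this toy quadratic problem only."""
    def total(x: Sequence[float]) -> float:
        return sum(w * c(x) for w, c in zip(weights, costs))

    x = [0.0] * dim
    for _ in range(steps):
        grad = []
        for k in range(dim):
            xp = list(x)
            xp[k] += h
            xm = list(x)
            xm[k] -= h
            grad.append((total(xp) - total(xm)) / (2 * h))
        x = [xk - lr * gk for xk, gk in zip(x, grad)]
    return x


def outer_search(x_exp: Sequence[float], costs: Sequence[Cost],
                 n_grid: int = 101) -> Tuple[float, Vector]:
    """Outer level: grid-search the weights (w, 1 - w) so that the inner
    optimum matches x_exp in the least-squares sense. Weights are constrained
    to sum to one because scaling all weights by the same factor leaves the
    inner optimum unchanged."""
    best_w, best_x, best_err = 0.0, [], float("inf")
    for i in range(n_grid):
        w = i / (n_grid - 1)
        x_sim = inner_solve([w, 1.0 - w], costs, len(x_exp))
        err = sum((a - b) ** 2 for a, b in zip(x_sim, x_exp))
        if err < best_err:
            best_w, best_x, best_err = w, x_sim, err
    return best_w, best_x


if __name__ == "__main__":
    r = [1.0, 2.0]
    costs = [effort, make_reference_term(r)]
    # Synthetic target generated from weights (0.3, 0.7): x* = 0.7 * r.
    w, x_sim = outer_search([0.7, 1.4], costs)
    print(f"recovered w = {w:.2f}, simulated x = {x_sim}")
```

For this toy problem the inner optimum is x* = (1 - w) r, so the outer search should recover w = 0.30. In a real IOC setting the grid search would typically be replaced by a derivative-free or gradient-based optimizer over more than two weights.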

This work aims to implement an IOC-inspired approach for generating human walking with a neuromusculoskeletal model using deep RL. Building on an existing approach, we want to investigate whether realistic human gait can be generated by designing the RL reward function from the optimal cost function terms previously identified through IOC.
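One possible reading of designing the reward "based on cost function terms from IOC" is to turn the weighted IOC cost into a per-step penalty that is subtracted from a task reward. The sketch below assumes three hypothetical per-step cost terms (muscle effort, a lateral stability proxy, passive ligament torque), a velocity-tracking task reward with an assumed target speed, and weights supplied by the caller; none of these names or formulas come from depRL or from [7], and the actual terms would have to be matched to the quantities the simulation exposes.

```python
from dataclasses import dataclass
from typing import Dict, Sequence


@dataclass
class StepState:
    """Hypothetical per-step quantities read out of the simulation."""
    com_velocity: float                # forward centre-of-mass velocity (m/s)
    activations: Sequence[float]       # muscle activations in [0, 1]
    com_lateral_offset: float          # hypothetical stability proxy (m)
    ligament_torques: Sequence[float]  # passive joint-limit torques (N m)


def cost_terms(s: StepState) -> Dict[str, float]:
    """Per-step versions of IOC-style cost terms (illustrative formulas)."""
    return {
        # Mean cubed activation, one common choice of effort measure.
        "effort": sum(a ** 3 for a in s.activations) / len(s.activations),
        "stability": s.com_lateral_offset ** 2,
        "ligament": sum(t * t for t in s.ligament_torques),
    }


def ioc_reward(s: StepState, weights: Dict[str, float],
               target_velocity: float = 1.2) -> float:
    """Velocity-tracking task reward minus the weighted IOC cost.

    `weights` is assumed to come from IOC (for example, a speed-dependent
    regression model); here it is simply passed in by the caller.
    """
    task = -(s.com_velocity - target_velocity) ** 2
    penalty = sum(weights[name] * value
                  for name, value in cost_terms(s).items())
    return task - penalty


if __name__ == "__main__":
    # Placeholder weights, NOT values reported in [7].
    w = {"effort": 1.0, "stability": 1.0, "ligament": 1.0}
    state = StepState(1.2, [0.5, 0.5], 0.0, [0.0])
    print(ioc_reward(state, w))  # -0.125
```

Keeping the weights in a dictionary keyed by term name makes it easy to swap in speed-dependent weights without touching the reward code itself.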

The proposed work consists of the following parts:

- Get the environment and model running (https://github.com/martius-lab/depRL)

- Determine the optimal cost function terms for the musculoskeletal model and implement them as the reward function

- Compare resulting kinematics & forces
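For the comparison step, simulated and experimental curves are commonly time-normalized to the gait cycle and compared with measures such as the root-mean-square error (RMSE) and the Pearson correlation coefficient. The sketch below assumes that each trajectory covers exactly one gait cycle (heel strike to heel strike) and is given as a list of samples; the 101-point grid is a common convention rather than something this proposal specifies, and the function names are hypothetical. The same functions can be applied to joint angles or ground reaction forces.

```python
import math
from typing import List, Sequence, Tuple


def resample(y: Sequence[float], n: int = 101) -> List[float]:
    """Linearly interpolate one gait cycle onto n points (0 to 100 %)."""
    if len(y) < 2 or n < 2:
        raise ValueError("need at least two samples and two output points")
    out = []
    for i in range(n):
        pos = i * (len(y) - 1) / (n - 1)
        lo = int(math.floor(pos))
        hi = min(lo + 1, len(y) - 1)
        frac = pos - lo
        out.append(y[lo] * (1.0 - frac) + y[hi] * frac)
    return out


def rmse(a: Sequence[float], b: Sequence[float]) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)) / len(a))


def pearson_r(a: Sequence[float], b: Sequence[float]) -> float:
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    if sa == 0.0 or sb == 0.0:
        raise ValueError("correlation undefined for a constant signal")
    return cov / (sa * sb)


def compare_cycle(sim: Sequence[float], exp: Sequence[float],
                  n: int = 101) -> Tuple[float, float]:
    """Return (RMSE, Pearson r) after time-normalizing both cycles."""
    s, e = resample(sim, n), resample(exp, n)
    return rmse(s, e), pearson_r(s, e)
```

RMSE captures offsets in magnitude, while the correlation captures whether the curve shapes agree; reporting both avoids rating a correctly shaped but shifted trajectory as a perfect match.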

Requirements

- Interest in biomechanics and reinforcement learning

- Preferably first experience with deep learning / reinforcement learning

- Independent working style

Supervisors

[1] Weng, J., Hashemi, E., & Arami, A. (2021). Natural Walking With Musculoskeletal Models Using Deep Reinforcement Learning. IEEE Robotics and Automation Letters, 6(2), 4156–4162. https://doi.org/10.1109/LRA.2021.3067617

[3] Anand, A. S., Zhao, G., Roth, H., & Seyfarth, A. (2019). A deep reinforcement learning based approach towards generating human walking behavior with a neuromuscular model. 2019 IEEE-RAS 19th International Conference on Humanoid Robots (Humanoids), Toronto, ON, Canada, 537–543. https://doi.org/10.1109/Humanoids43949.2019.9035034

[4] Nowakowski, K., Carvalho, P., Six, J. B., Maillet, Y., Nguyen, A. T., Seghiri, I., M’Pemba, L., Marcille, T., Ngo, S. T., & Dao, T. T. (2021). Human locomotion with reinforcement learning using bioinspired reward reshaping strategies. Medical & biological engineering & computing, 59(1), 243-256. https://doi.org/10.1007/s11517-020-02309-3

[5] De Vree, L., & Carloni, R. (2021). Deep Reinforcement Learning for Physics-Based Musculoskeletal Simulations of Healthy Subjects and Transfemoral Prostheses’ Users During Normal Walking. IEEE transactions on neural systems and rehabilitation engineering : a publication of the IEEE Engineering in Medicine and Biology Society, 29, 607–618. https://doi.org/10.1109/TNSRE.2021.3063015

[6] Song, S., Kidziński, Ł., Peng, X. B., Ong, C., Hicks, J., Levine, S., Atkeson, C. G., & Delp, S. L. (2021). Deep reinforcement learning for modeling human locomotion control in neuromechanical simulation. Journal of neuroengineering and rehabilitation, 18(1), 126. https://doi.org/10.1186/s12984-021-00919-y

[7] Weng, J., Hashemi, E., & Arami, A. (2023). Human Gait Cost Function Varies With Walking Speed: An Inverse Optimal Control Study. IEEE Robotics and Automation Letters, 8(8), 4777–4784. https://doi.org/10.1109/LRA.2023.3289088

[8] Nguyen, V. Q., Johnson, R. T., Sup, F. C., & Umberger, B. R. (2019). Bilevel Optimization for Cost Function Determination in Dynamic Simulation of Human Gait. IEEE transactions on neural systems and rehabilitation engineering : a publication of the IEEE Engineering in Medicine and Biology Society, 27(7). https://doi.org/10.1109

[9] Weng, J., Hashemi, E., & Arami, A. (2022). Adaptive Reference Inverse Optimal Control for Natural Walking With Musculoskeletal Models. IEEE transactions on neural systems and rehabilitation engineering : a publication of the IEEE Engineering in Medicine and Biology Society, 30, 1567–1575. https://doi.org/10.1109/TNSRE.2022.3180690