How Would Neural Networks Explore the World if We Let Them See Through “Human Eyes”? – New Publication

The Machine Learning and Data Analytics (MaD) Lab is pleased to announce that our latest paper, “Behind the Machine’s Gaze: Neural Networks with Biologically-inspired Constraints Exhibit Human-like Visual Attention”, has been published in Transactions on Machine Learning Research (TMLR).

Link to the paper (open access): https://openreview.net/forum?id=7iSYW1FRWA

GitHub Code: https://github.com/SchwinnL/NeVA

Congratulations to all authors: Leo Schwinn, Doina Precup, Björn Eskofier and Dario Zanca.

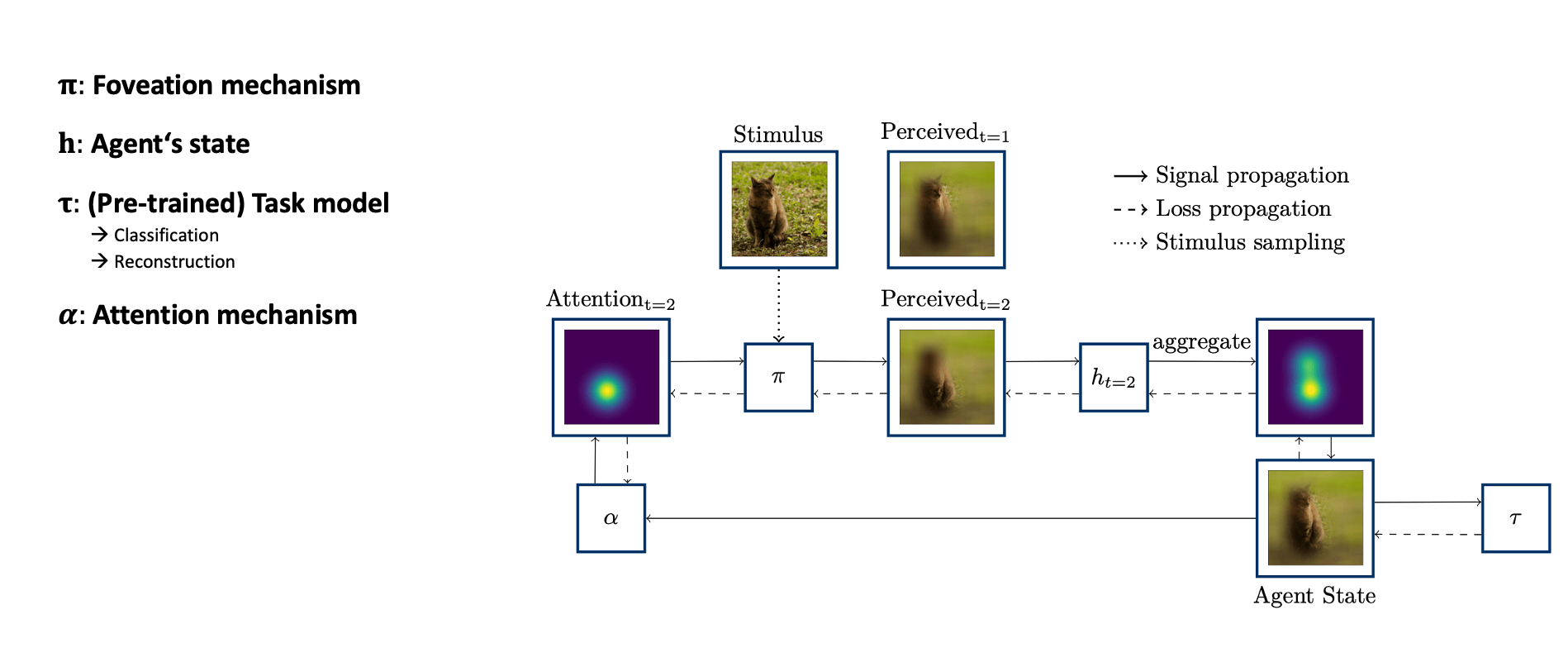

Abstract: By and large, existing computational models of visual attention tacitly assume perfect vision and full access to the stimulus and thereby deviate from foveated biological vision. Moreover, modeling top-down attention is generally reduced to the integration of semantic features without incorporating the signal of high-level visual tasks, which have been shown to partially guide human attention.

We propose the Neural Visual Attention (NeVA) algorithm to generate visual scanpaths in a top-down manner. With our method, we explore the ability of neural networks on which we impose a biologically-inspired foveated vision constraint to generate human-like scanpaths without directly training for this objective. The loss of a neural network performing a downstream visual task (i.e., classification or reconstruction) flexibly provides top-down guidance to the scanpath.
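To make the idea concrete, here is a minimal toy sketch in Python (NumPy only) of how a task loss can steer a scanpath under a foveation constraint. It is not the authors' implementation (see the GitHub repository for that). It rests on several assumptions: the "task" is plain pixel reconstruction measured by mean squared error, the periphery is approximated by a crude block-average blur, and the next fixation is chosen by greedy search over a coarse grid of candidates. All function names and parameters (`coarse_blur`, `foveation_mask`, `greedy_scanpath`, `sigma`, `stride`, `block`) are hypothetical and chosen for illustration only.

```python
import numpy as np


def coarse_blur(image, block=8):
    """Crude stand-in for peripheral vision: average over block x block tiles."""
    h, w = image.shape
    assert h % block == 0 and w % block == 0, "image size must be a multiple of block"
    tiles = image.reshape(h // block, block, w // block, block).mean(axis=(1, 3))
    return np.repeat(np.repeat(tiles, block, axis=0), block, axis=1)


def foveation_mask(shape, fixation, sigma):
    """Gaussian visibility map: 1 at the fixation point, decaying towards 0."""
    ys, xs = np.mgrid[0:shape[0], 0:shape[1]]
    dist_sq = (ys - fixation[0]) ** 2 + (xs - fixation[1]) ** 2
    return np.exp(-dist_sq / (2.0 * sigma ** 2))


def greedy_scanpath(image, n_fixations=5, sigma=6.0, stride=8, block=8):
    """Pick fixations one at a time, each minimizing a toy reconstruction loss.

    Assumption: the task loss is the MSE between the foveated percept and the
    original stimulus. A trained task network would take its place in practice.
    """
    blurred = coarse_blur(image, block)
    h, w = image.shape
    candidates = [(y, x)
                  for y in range(stride // 2, h, stride)
                  for x in range(stride // 2, w, stride)]
    memory = np.zeros(image.shape)  # visibility accumulated over past fixations
    scanpath, losses = [], []
    for _ in range(n_fixations):
        best = None
        for fix in candidates:
            # Combine what was seen before with what this fixation would reveal.
            visibility = np.maximum(memory, foveation_mask(image.shape, fix, sigma))
            percept = visibility * image + (1.0 - visibility) * blurred
            loss = float(np.mean((percept - image) ** 2))
            if best is None or loss < best[0]:
                best = (loss, fix, visibility)
        loss, fix, memory = best
        scanpath.append(fix)
        losses.append(loss)
    return scanpath, losses


if __name__ == "__main__":
    # Toy stimulus: flat background with one detailed (checkerboard) patch.
    stimulus = np.zeros((32, 32))
    stimulus[16:24, 16:24] = np.indices((8, 8)).sum(axis=0) % 2
    path, losses = greedy_scanpath(stimulus, n_fixations=3)
    print("scanpath:", path)
    print("losses:", losses)
```

In this toy setup the fixations are drawn towards regions where the blurred periphery loses the most task-relevant detail. Swapping the reconstruction loss for a classification loss would change which regions count as informative, which is the kind of task dependence the paragraph above describes.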

Extensive experiments show that our method outperforms state-of-the-art unsupervised human attention models in terms of similarity to human scanpaths. Additionally, the flexibility of the framework makes it possible to quantitatively investigate the role of different tasks in the generated visual behaviors. Finally, we demonstrate the superiority of the approach in a novel experiment that investigates the utility of scanpaths in real-world applications with imperfect viewing conditions.