New Paper: Identifying Untrustworthy Predictions in Neural Networks by Geometric Gradient Analysis

We are proud to announce that our latest paper “Identifying Untrustworthy Predictions in Neural Networks by Geometric Gradient Analysis” has been accepted for publication at the Conference on Uncertainty in Artificial Intelligence (UAI 2021), one of the premier international conferences on research related to knowledge representation, learning, and reasoning in the presence of uncertainty.

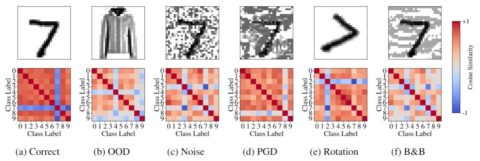

In this work, we propose a geometric gradient analysis (GGA) to improve the identification of untrustworthy predictions without retraining a given model. GGA analyzes the geometry of a neural network's loss landscape using the saliency maps of its inputs, i.e., the gradients of the loss with respect to the input.
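To make "geometry of saliency maps" a bit more concrete, here is a minimal sketch. It is not the implementation from the paper. It assumes a toy linear softmax classifier. For every possible class label, it computes the input gradient of the cross-entropy loss, which gives one saliency map per class. It then measures the angles between these maps with a cosine-similarity matrix. The model, the function names `per_class_saliency` and `cosine_similarity_matrix`, and the use of cosine similarity are illustrative assumptions. This post does not describe how GGA turns such geometric information into a trustworthiness score, so the sketch stops at the similarity matrix.

```python
import numpy as np


def softmax(z: np.ndarray) -> np.ndarray:
    """Numerically stable softmax for a 1-D logit vector."""
    z = z - z.max()
    e = np.exp(z)
    return e / e.sum()


def per_class_saliency(W: np.ndarray, b: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Input gradient of the cross-entropy loss, computed once per class label.

    Assumes a hypothetical linear softmax model z = W @ x + b with
    loss L_k = -log softmax(z)[k]. Its input gradient is
    dL_k/dx = W.T @ (p - e_k), where p = softmax(z).
    Returns shape (num_classes, num_features): one saliency map per row.
    """
    p = softmax(W @ x + b)
    num_classes = W.shape[0]
    # Row k of (p - I) equals p - e_k; right-multiplying by W yields (W.T @ (p - e_k)).T
    return (p[None, :] - np.eye(num_classes)) @ W


def cosine_similarity_matrix(G: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Pairwise cosine similarities between the rows (saliency maps) of G."""
    norms = np.linalg.norm(G, axis=1, keepdims=True)
    G_unit = G / np.maximum(norms, eps)
    return G_unit @ G_unit.T


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    W = rng.normal(size=(3, 5))  # hypothetical 3-class model on 5 input features
    b = np.zeros(3)
    x = rng.normal(size=5)
    S = cosine_similarity_matrix(per_class_saliency(W, b, x))
    print(np.round(S, 3))  # symmetric 3x3 matrix with ones on the diagonal
```

The analytic gradient keeps the example dependency-free. For a real network, the per-class saliency maps would instead come from automatic differentiation.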

Authors: Leo Schwinn, An Nguyen, René Raab, Leon Bungert, Daniel Tenbrinck, Dario Zanca, Martin Burger, Bjoern Eskofier